Understanding ACID

Yet another ACID article

Introduction

I know this is “yet another ACID article” but if you don’t learn anything new after reading this, I’ll buy you a drink.

Not so long ago, I built a basic database storage engine. In the near future, I’ll be publishing content about it. It’s going to be very cool.

Until then, let’s understand ACID.

Sponsor

If you want to be better at solving hard problems, and building real stuff, you’re going to love CodeCrafters.

Build your own docker, Git, Redis, and more.

I’m also a member of CodeCrafters. If you want to join you can get a 40% discount.

ACID

It stands for:

Atomicity

Consistency

Isolation

Durability

Atomicity

Atomic refers to something that cannot be broken down into smaller parts. It’s a single unit of work, for example:

insert into users(username, age) values ("johndoe", 29);This is a single unit of work. It either succeeds or fails. There’s no in-between.

That’s easy, of course, but what about this?

insert into orders(user_id, amount) values(1, 199);

insert into order_items(order_id, product_id) values(1, 1153);

insert into order_items(order_id, product_id) values(1, 792);What happens if the last insert fails? The database is left in an inconsistent state because these operations were not executed as a single atomic unit.

In relational databases, transactions give us atomicity by “wrapping” queries and treating them as one unit:

start transaction;

insert into orders(user_id, amount) values(1, 199);

insert into order_items(order_id, product_id) values(1, 1153);

insert into order_items(order_id, product_id) values(1, 792);

commit;These three queries are treated as one single atomic unit.

Right?

No.

For a long time, I thought if I use start transaction it’s somehow becomes atomic. But it’s not true. Let’s put an invalid value into the second insert:

insert into order_items(order_id, product_id) values("ysdf", 1153);And now run all three queries in a transaction:

You can see logs like Query OK, 1 row affected. The first and the last insert statements are executed. Even though, there was an error in the middle, two rows were inserted.

In my experience, lots of developers think that if something fails the database is reverted back to its initial state by just adding a “start transaction:”

start transaction;

insert into orders(user_id, amount) values(1, 199);

insert into order_items(order_id, product_id) values(1, 1153);

insert into order_items(order_id, product_id) values(1, 792);

commit;But we’ve seen that it’s not true. So let’s fix the query:

start transaction;

insert into orders(user_id, amount) values(1, 199);

if row_count() <= 0 then

rollback;

select "error while creating order";

show errors;

end if;

set @order_id = last_insert_id();

insert into order_items(order_id, product_id) values(@order_id, 1153);

if row_count() <= 0 then

rollback;

select "error while creating order item";

show errors;

end if;

insert into order_items(order_id, product_id) values(@order_id, 792);

if row_count() <= 0 then

rollback;

select "error while creating order item";

show errors;

end if;

commit;

select 'done';We just found a language that’s error handling is more annoying then Golang’s

The missing piece was rollback. If you want full control over the state of your DB you need to check every operation and rollback if needed.

Usually, we handle these in the backend code instead of MySQL itself, but it's still interesting to look at it.

row_count returns the number of affected rows by the last executed statement. It can be an insert or an update. Or a delete:

If you liked this meme, tell your friend about it and get a 1-month premium subscription for free.

After each insert, we check if row_count() returns a value less than or equal to zero and we rollback.

Now these three queries are really form a single, atomic unit. Either all of them succeeds or none of them. Protecting the state of your DB.

But of course, usually we handle this in code:

public function store(Request $request, OrderService $orderService)

{

$order = DB::transaction(function () {

$order = $orderService->create($request->user(), $request->amount);

foreach ($request->items as $item) {

$orderSerivce->addItem($order, $item);

}

return $order;

});

return OrderResource::make($order);

}That’s it. An atomic “store” function. If an Exception is thrown the DB is reverted.

Consistency

Consistency is a little bit tricky.

It means that the DB transitions from one valid state to another valid state after a transaction.

The question is what is a “valid state” and how the DB can enforce it?

Foreign keys

One of the fundamentals concepts of a relational database is foreign keys. So use them. They ensure that only valid values can be inserted into a foreign key column. It enforces “valid state” in the context of relationships.

Unique constraints

Also a very important concept in relational databases. It guarantees that you don’t have duplicated data in a situation where you are not supposed to have duplicated data.

In my experience, people don’t use comopsite unique indexes enough. For example, take a look at this table called post_likes:

The user_id and post_id columns should be a unique composite index since one user can only like a post once:

create unique index `user_id_post_id_idx` on `post_likes` (`user_id`, `post_id`)Data types and modifiers

It’s obvious that you cannot inert a string value into an integer column and it’s a good. But more importantly we can use data modifiers (such as unsigned) to enforce “valid state” and data integrity:

unsigned can be used to enforce basic business rules at the database level. For example, if you have a balance column that you execute additions and subtractions on, an unsigned modifier guarantess it always stays positive, even if you have a bug in your system.

varchar(100). Limiting the length of a column also ensures integrity and consistency even if your validation logic accepts an invalid string. The DB engine simply returns an error and rolls back the database.

NOT NULL guarantees you don’t have empty values in required columns.

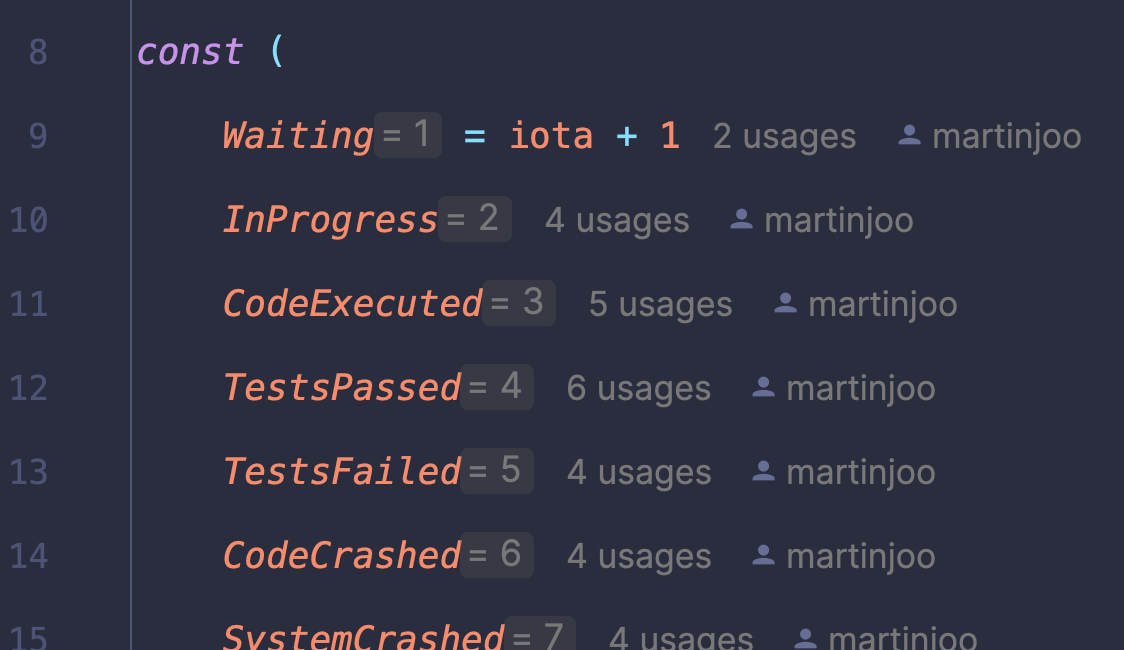

Reasonable default values can also be very useful. For example, whenever I have a “status” or “state” column I also add the initial status as the defaul value:

create table orders (

id bigint unsigned primary key,

status varchar(32) default 'pending',

created_at timestamp default current_timestamp

);check constraints

The next one is sort a gray-area. You can do this in MySQL:

create table employees (

id bigint unsigned primary key,

name varchar(100) not null,

email varchar(100) check (email LIKE '%@%.%'),

age smallint CHECK (age between 18 and 65),

salary decimal(10,2) unsigned

department_id int check (department_id in (select id from departments)),

hire_date date check (hire_date > '2000-01-01')

);You can basically write validation rules with the check keyword if you’re using MySQL8. This is useful, but it’s very “hidden.” Meaning, developers don’t naturally check the table definition for validation rules. They don’t accept the database to run e-mail validation rules, etc. (by the way, triggers have the same problem, in my opinion, so they are not included in this article). These checks are usually done by a framework or package on the HTTP level.

In some cases, however, I can see usefulnes in checks. Let’s say your app tracks some kind of balance. It can be negative, but only to a certain amount, let’s say -10,000 is the limit. If this is an important business rule, then I see value in doing this:

balance int not null check (balance >= -10000)This should not be your only validation, of course. It should be your last “safety net.”

At the beginning, I said consistency is tricky. It’s tricky because it exists on two levels:

Database

Application

The DB can help you with these features but still need to handle at least thw following:

Validate your data properly

Handle errors properly

Use transactions when it makes sense

Use some locking mechanism (optimistic, pessimistic)

Use audit logs or event streams. Or event sourcing.

Regulary back up your system (database, redis, user uploaded files, .env files)

Check data integrity and business rules

If you have statuses and transitions you can use state machines, for example

If you calculate something (such as a financial formula) you should assert the input variables

Most of your API should be idempotent

If you run a big migration and you delete an old table don’t actually delete the it. Just rename it to

orders_backupfor example. You can delete it later if the release was successful.You can run periodic checks in certain cases. For example, a background job that check that

balancecolumn and notifies you if something went wrong.If you cache your data, always use a TTL (time-to-live) by default. Redis (or some other system) will automatically delete your key when the TTL passes. If you think 1 hour is a good TTL for a specific cache item, set it to 30 minutes by default.

The list goes on but we’re leaving the territory of database systems. Other techniques, such as defensive programming exists to defend you againts these kinds of errors:

Isolation

What happens if two DB clients accesses the same record at the same time and they both modify it in a different way?