Idempotent APIs and safe retries

Introduction

I’ve read an interesting article by Neo Kim called “How Stripe Prevents Double Payment Using Idempotent API”.

It describes how Stripe uses idempotent APIs to avoid double payments and other mistakes.

In the first part of this post, I’m going to summarize the original article, and then we’re going to build a basic idempotent API that emulates how Stripe works.

Idempotent APIs

An idempotent API guarantees that you can invoke it as many times as you want without causing any additional side effects. In other words, making the same request multiple times has the same effect on the server's state as making it once.

The most obvious example is any HTTP DELETE request. Deleting a resource multiple times has the same effect as deleting it once (after the first deletion, the resource no longer exists).

By definition, the following HTTP verbs should be idempotent:

GET: fetching a resource does not change the state at all

PUT: updating a resource with the same data multiple times results in the same state. PUT should be used when you “replace” the whole resource. For example, a user submits an update form with all of the resource’s properties.

DELETE: deleting a resource multiple times has the same effect as deleting it once
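To make the difference concrete, here is a minimal plain-PHP sketch (no framework; the arrays standing in for tables and the function names are hypothetical) that repeats a PUT-like replace, a DELETE-like removal, and a POST-like create, the way a retrying client would:

```php
<?php

// Hypothetical in-memory stores standing in for database tables.
$products = [1 => ['name' => 'Book', 'price' => 990]];
$charges = [];

// PUT-like: replace the whole resource. Same input => same resulting state.
function putProduct(array &$store, int $id, array $data): void
{
    $store[$id] = $data;
}

// DELETE-like: removing a key that is already gone is a no-op.
function deleteProduct(array &$store, int $id): void
{
    unset($store[$id]);
}

// POST-like: every call appends a new record, so repeating it is NOT idempotent.
function postCharge(array &$charges, int $amount): void
{
    $charges[] = ['amount' => $amount];
}

putProduct($products, 1, ['name' => 'Book', 'price' => 1003]);
$afterFirstPut = $products;
putProduct($products, 1, ['name' => 'Book', 'price' => 1003]); // retry
var_dump($products === $afterFirstPut); // bool(true)

deleteProduct($products, 1);
deleteProduct($products, 1); // retry
var_dump($products === []); // bool(true)

postCharge($charges, 990);
postCharge($charges, 990); // retry
echo count($charges), PHP_EOL; // 2: the customer was charged twice
```

Only the POST-like operation changes the state again on the retry, which is why a payment endpoint needs extra machinery to become idempotent.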

Why is this important at all?

Mostly because of network errors. What happens if the request is processed but the network connection fails and the client doesn’t get a response? It might retry the request later. What happens if your API is not idempotent? You just charged the customer twice (in the case of Stripe).

What happens if the request fails while the server processes it? The client might retry it later. What happens if your API is not idempotent? You just charged the customer twice.

The two most important things when implementing robust, idempotent APIs are idempotency keys and ACID databases (transactions).

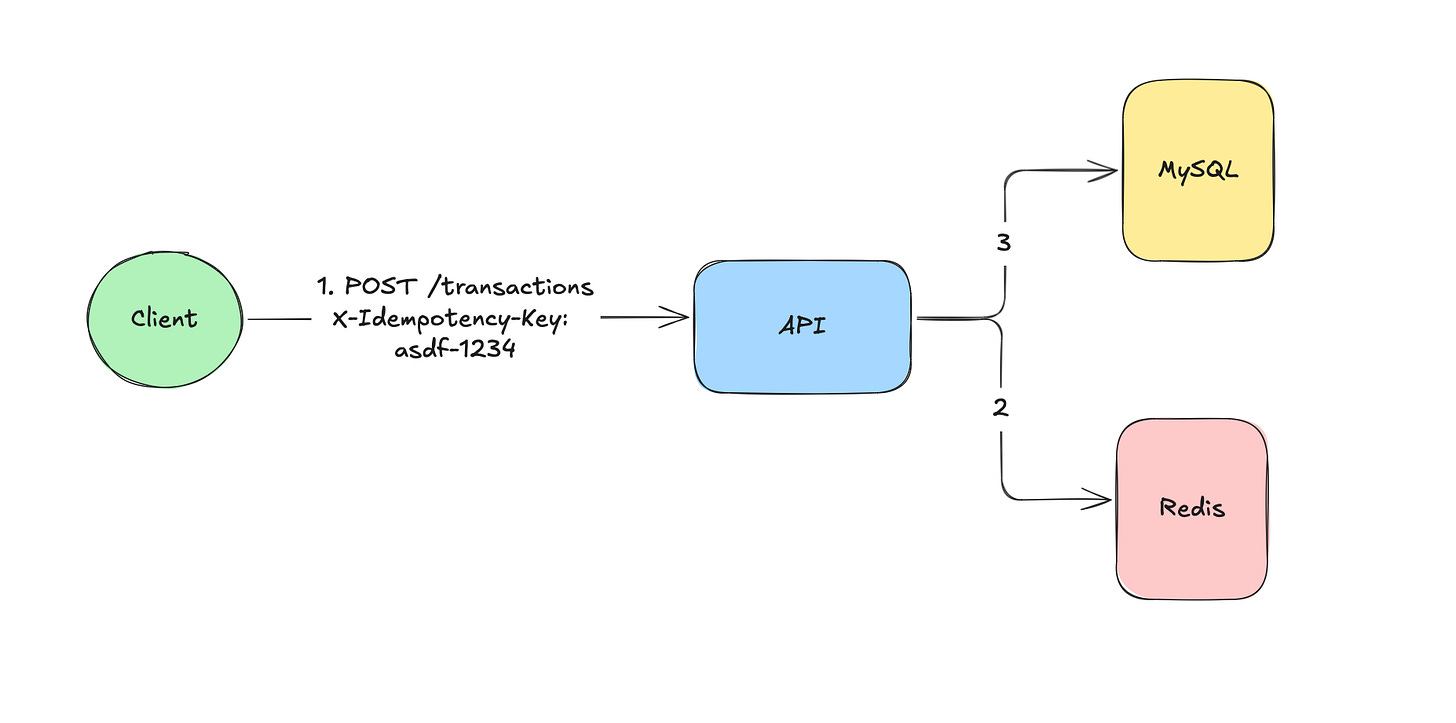

Here’s the flow we’re going to implement:

The client sends a UUID in the request headers. This is the idempotency key.

Any time the request data changes, the idempotency key must change too. For example, if the user submits a form, then changes something and submits it again, it’s not the same request as the first one, so it must have a new UUID as well.

Whenever a request comes in, the API checks whether the UUID has already been used by looking it up in an in-memory store (Redis). If it has, the API returns the stored result.

Otherwise, it processes the request and stores the result in Redis under the idempotency key. While processing the request, it uses transactions to guarantee data consistency.
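The flow above can be sketched in plain PHP before bringing Laravel into the picture. This is a minimal sketch under simplifying assumptions: a PHP array stands in for the Redis hash, another array stands in for the transactions table, and TransactionService and uuidV4() are hypothetical names. It runs in a single process, so it says nothing about concurrent duplicates.

```php
<?php

// Client side: build a random (version 4) UUID to use as the idempotency key.
function uuidV4(): string
{
    $bytes = random_bytes(16);
    $bytes[6] = chr((ord($bytes[6]) & 0x0f) | 0x40); // version 4
    $bytes[8] = chr((ord($bytes[8]) & 0x3f) | 0x80); // RFC 4122 variant
    return vsprintf('%s%s-%s-%s-%s-%s%s%s', str_split(bin2hex($bytes), 4));
}

// Server side (hypothetical): arrays stand in for Redis and the database.
final class TransactionService
{
    /** @var array<string, string> idempotency key => JSON-encoded result */
    private array $requests = [];

    /** @var list<array{id: int, amount: int, product_id: int}> */
    public array $transactions = [];

    public function store(string $idempotencyKey, int $amount, int $productId): array
    {
        // 1. Key already used? Return the stored result instead of reprocessing.
        if (isset($this->requests[$idempotencyKey])) {
            return json_decode($this->requests[$idempotencyKey], true);
        }

        // 2. Otherwise process the request (a DB transaction in the real API)...
        $transaction = [
            'id' => count($this->transactions) + 1,
            'amount' => $amount,
            'product_id' => $productId,
        ];
        $this->transactions[] = $transaction;

        // 3. ...and remember the result under the idempotency key.
        $this->requests[$idempotencyKey] = json_encode($transaction);

        return $transaction;
    }
}

$service = new TransactionService();
$key = uuidV4();

$first = $service->store($key, 1003, 15);
$retry = $service->store($key, 1003, 15); // the client never saw the first response

echo count($service->transactions), PHP_EOL; // 1
var_dump($first === $retry); // bool(true)

$service->store(uuidV4(), 1003, 15); // new key => new request
echo count($service->transactions), PHP_EOL; // 2
```

Retrying with the same key returns the stored result and leaves a single transaction, while a fresh key is treated as a new request.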

It looks like this:

Implementing a sync API

For this demonstration, I’m going to use a Transaction API with only one endpoint:

```http
POST /api/transactions

{
    "amount": 990,
    "product_id": 398
}
```

Each request must include an X-Idempotency-Key header with a UUID. This is the Request object:

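```php
namespace App\Http\Requests;

use Illuminate\Foundation\Http\FormRequest;

class StoreTransactionRequest extends FormRequest
{
    public function rules(): array
    {
        return [
            'amount' => ['required', 'numeric'],
            'product_id' => ['required', 'numeric'],
            // Copied from the X-Idempotency-Key header in prepareForValidation()
            'idempotency_key' => ['required', 'uuid'],
        ];
    }
}
```

Since idempotency_key is a header rather than a body parameter, we need to use the prepareForValidation method: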

```php
protected function prepareForValidation()
{
    $this->merge([
        'idempotency_key' => $this->getIdempotencyKey(),
    ]);
}
```

This will add the key idempotency_key to the request data so it can be validated just like a body parameter. I added a small helper that returns the header value:

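```php
public function getIdempotencyKey(): ?string
{
    // headers->get() returns null when the header is missing. A non-nullable
    // return type would throw a TypeError before validation could report it.
    return $this->headers->get('X-Idempotency-Key');
}
```

The following steps need to be done in the controller: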

Checking if the idempotency key has already been stored in Redis

Returning the value from Redis if the key exists

Creating the transaction and storing it in Redis if it does not exist

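```php
namespace App\Http\Controllers;

use App\Http\Requests\StoreTransactionRequest;
use Illuminate\Http\Response;
// Assumption: Laravel's Redis manager, which forwards calls such as hGet() to the
// default connection. With the phpredis client, hGet() returns false on a miss.
use Illuminate\Redis\RedisManager as Redis;

class TransactionController
{
    public function store(
        StoreTransactionRequest $request,
        Redis $redis,
    ) {
        $idempotencyKey = $request->getIdempotencyKey();

        // Seen this key before? Return the stored result instead of charging again.
        $value = $redis->hGet('requests', $idempotencyKey);

        if ($value !== false) {
            return response([
                'data' => json_decode($value),
            ], Response::HTTP_OK);
        }

        // ... create and store the transaction (next snippet)
    }
}
```

For the data structure, I went with a hash map that looks like this: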

It’s one big map where the keys are the idempotency keys and the values are JSON-encoded strings of the transaction details. If the given key exists in the hash, the controller returns the JSON-decoded value.
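The layout can be illustrated with a plain PHP array. In this sketch the stored values are illustrative, and hGetEmulated() is a hypothetical helper that mimics phpredis' hGet(), which returns false when the field is missing (hence the !== false check in the controller):

```php
<?php

// Illustrative contents of the "requests" hash (values made up for the example):
// field = idempotency key, value = JSON-encoded transaction.
$requests = [
    '9b57a761-757a-4552-aaa9-0f5590688932' => json_encode(
        ['id' => 1, 'amount' => 1003, 'product_id' => 15]
    ),
];

// Hypothetical helper mimicking phpredis' hGet(): the value, or false if the field is missing.
function hGetEmulated(array $hash, string $field): string|false
{
    return $hash[$field] ?? false;
}

$hit = hGetEmulated($requests, '9b57a761-757a-4552-aaa9-0f5590688932');
$miss = hGetEmulated($requests, '00000000-0000-4000-8000-000000000000');

if ($hit !== false) {
    $transaction = json_decode($hit); // stdClass, as in the controller
    echo $transaction->amount, PHP_EOL; // 1003
}
var_dump($miss); // bool(false)
```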

The next part creates the new transaction and stores it in Redis:

```php
// Needs these imports in the controller file (model namespace assumed):
// use App\Models\Transaction;
// use Illuminate\Support\Facades\DB;

$result = DB::transaction(function () use ($request) {
    return Transaction::create([
        'user_id' => $request->user()->id,
        'amount' => $request->amount,
        'product_id' => $request->product_id,
    ]);
});

// Remember the result so that retries with the same key get it back.
$redis->hSet('requests', $idempotencyKey, json_encode($result));

return response([
    'data' => $result,
], Response::HTTP_OK);
```

Of course, in a real-world application, there would be more steps inside the transaction. There’s no point in beginning a transaction for a single query, but it’s just a simple example.

After the transaction is created, it is stored in the Redis hash.

If you now send the following request 10 times, one after another, only one transaction will be created:

```bash
curl --request POST \
  --url http://127.0.0.1:8000/api/transactions \
  --header 'Authorization: Bearer <YOUR TOKEN>' \
  --header 'Content-Type: application/json' \
  --header 'X-Idempotency-Key: 9b57a761-757a-4552-aaa9-0f5590688932' \
  --data '{
    "amount": 1003,
    "product_id": 15
  }'
```

If you change the UUID, another transaction is created because it is now a new request.

This means that the POST /api/transactions API is idempotent and supports safe retries. In other words, it avoids double charges, at least as long as the retries don’t arrive at the same time.

Implementing an async API

The above example works as expected. However, many payment processors and banks use async APIs to handle payments.

Fortunately, it’s very easy to refactor the existing code to an async implementation. This is what the Controller looks like:

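```php
public function store(StoreTransactionRequest $request)
{
    $idempotencyKey = $request->getIdempotencyKey();

    // Pass only validated fields to the DTO (all() would include unvalidated input).
    $transactionData = TransactionData::from([
        'user_id' => $request->user()->id,
        ...$request->safe()->only(['amount', 'product_id']),
    ]);

    // Process the payment in the background; the job does the Redis check.
    StoreTransactionJob::dispatch(
        $idempotencyKey,
        $transactionData,
    );

    // 202 Accepted: the request has been queued, not processed yet.
    return response([
        'data' => [
            'idempotency_key' => $idempotencyKey,
        ],
    ], Response::HTTP_ACCEPTED);
}
```

I added a TransactionData DTO so the Controller can pass data to the Job without using untyped associative arrays.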
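The article doesn’t show how TransactionData is defined. As a hypothetical, library-free stand-in, a DTO with readonly promoted properties could look like this (fromArray() is an invented name, and the int casts assume amounts are stored in minor units such as cents):

```php
<?php

// Hypothetical, library-free TransactionData DTO (not the article's class).
final class TransactionData
{
    public function __construct(
        public readonly int $userId,
        public readonly int $amount, // assumption: minor units, e.g. cents
        public readonly int $productId,
    ) {
    }

    // Hypothetical factory: maps snake_case request keys to typed properties.
    public static function fromArray(array $input): self
    {
        return new self(
            userId: (int) $input['user_id'],
            amount: (int) $input['amount'],
            productId: (int) $input['product_id'],
        );
    }
}

$data = TransactionData::fromArray(['user_id' => 7, 'amount' => '990', 'product_id' => 398]);

// Queued jobs are serialized, so the DTO must survive a serialize/unserialize round trip.
$restored = unserialize(serialize($data));
var_dump($restored == $data); // bool(true)
```

Typed, immutable properties mean the job can rely on $this->transactionData->amount being an int instead of guessing at array keys.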

After the Controller dispatches the Job, it returns an HTTP 202 Accepted and the idempotency key. This is crucial because, with the key, the client can send subsequent requests to get the status of the transaction.

The status API can be really simple:

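```php
public function status(string $idempotencyKey, Redis $redis)
{
    $value = $redis->hGet('requests', $idempotencyKey);

    // Not in the hash yet: the job hasn't finished, so report "still accepted".
    if ($value === false) {
        return response([
            'data' => [],
        ], Response::HTTP_ACCEPTED);
    }

    $data = json_decode($value, true);

    // Load the current state of the transaction from the database.
    return response([
        'data' => Transaction::findOrFail($data['id']),
    ]);
}
```

If the idempotency key is not found in the hash map, 202 is returned to the client. You could argue that a 404 would be more appropriate. However, I don’t think it’s an error; it just means the transaction has not been processed yet. The trade-off is that a key that was never submitted also receives a 202, so the client can’t tell the two cases apart.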
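On the client side, polling the status endpoint could look like the following minimal sketch. pollTransaction() and the fake $fakeStatus endpoint are hypothetical; a real client would make an HTTP GET to the status route, which the article doesn’t show. A 200 means done, a 202 means keep waiting, and anything else is treated as an error:

```php
<?php

// Hypothetical polling client. $fetchStatus must return [httpStatus, decodedBody].
function pollTransaction(
    callable $fetchStatus,
    string $idempotencyKey,
    int $maxAttempts = 5,
    int $delaySeconds = 2,
): ?array {
    for ($attempt = 1; $attempt <= $maxAttempts; $attempt++) {
        [$status, $body] = $fetchStatus($idempotencyKey);

        if ($status === 200) {
            return $body['data']; // the job has stored the transaction
        }

        if ($status !== 202) {
            throw new RuntimeException("Unexpected status code: {$status}");
        }

        // 202 Accepted: not processed yet, wait before asking again.
        if ($attempt < $maxAttempts) {
            sleep($delaySeconds);
        }
    }

    return null; // still pending after $maxAttempts tries
}

// Fake endpoint for the demo: pending twice, then done.
$calls = 0;
$fakeStatus = function (string $key) use (&$calls): array {
    $calls++;
    return $calls < 3
        ? [202, ['data' => []]]
        : [200, ['data' => ['id' => 1, 'amount' => 1003, 'product_id' => 15]]];
};

$transaction = pollTransaction($fakeStatus, '9b57a761-757a-4552-aaa9-0f5590688932', 5, 0);
var_dump($transaction['id'], $calls); // int(1), int(3)
```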

Finally, the StoreTransactionJob is very straightforward:

```php
public function handle(Redis $redis): void
{
    // A retried or duplicated job must not create a second transaction.
    $value = $redis->hGet('requests', $this->idempotencyKey);

    if ($value !== false) {
        return;
    }

    $response = DB::transaction(function () {
        return Transaction::create([
            'user_id' => $this->transactionData->userId,
            'amount' => $this->transactionData->amount,
            'product_id' => $this->transactionData->productId,
        ]);
    });

    $redis->hSet(
        'requests',
        $this->idempotencyKey,
        json_encode($response),
    );
}
```

We’ve already seen this code; it was moved from the Controller.

If you don’t want to build a poll-based system where clients must send requests to the status endpoint, you can push the status from the job instead. For example, you can send SSE or WebSocket events to clients.

One of the many advantages of an async API is that it’s easier to implement retries. You don’t need to change the client, only the job:

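```php
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Queue\Queueable;

class StoreTransactionJob implements ShouldQueue
{
    use Queueable;

    // Attempt the job at most three times in total.
    public int $tries = 3;

    // Wait 30s before the 2nd attempt and 60s before the 3rd.
    public array $backoff = [30, 60];

    // ... constructor and handle() as shown above
}
```

$tries = 3 means the job is attempted up to three times in total. $backoff = [30, 60] means the worker waits 30 seconds after the first failure before the second attempt, and 60 seconds after the second failure before the third. So if something keeps failing, the three attempts are spread over roughly 90 seconds (plus the time each attempt takes). These are quite high backoff values. Of course, only experience and trial and error can give you the exact numbers that work best for your project.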
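To double-check the timing, here is a small sketch that computes when each attempt would start if every attempt failed instantly. retrySchedule() is a hypothetical helper; it assumes Laravel's documented behavior for array backoffs, where each entry is the delay before the corresponding retry and the last entry is reused for any further retries:

```php
<?php

// Hypothetical helper: start time (in seconds) of each attempt, ignoring execution time.
function retrySchedule(int $tries, array $backoff): array
{
    // Sketch simplification: no delays configured => retry immediately.
    $lastDelay = $backoff === [] ? 0 : $backoff[array_key_last($backoff)];
    $startTimes = [0]; // the first attempt starts immediately

    for ($attempt = 2; $attempt <= $tries; $attempt++) {
        // Delay before retry N is entry N-1; fall back to the last entry.
        $delay = $backoff[$attempt - 2] ?? $lastDelay;
        $startTimes[] = $startTimes[array_key_last($startTimes)] + $delay;
    }

    return $startTimes;
}

echo implode(', ', retrySchedule(3, [30, 60])), PHP_EOL; // 0, 30, 90
```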


Excellent article, one classic concurrency caveat though: what happens if the same request (same X-Idempotency-Key) arrives after the transaction is committed but before the hSet call? In this case we charge the customer twice. It’s a very small window, but edge cases are exactly what we are talking about here :).

The same can happen between hGet and the processing step.

HSETNX can be used instead of hGet + hSet.

For SQL databases, a SELECT FOR UPDATE could be used as an alternative.

Another approach is to use a Laravel Cache lock: https://laravel.com/docs/11.x/cache#atomic-locks
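The HSETNX suggestion amounts to claiming the key before doing any work, instead of checking with hGet and writing with hSet afterwards. Here is a minimal single-process sketch: InMemoryHash and handleRequest() are hypothetical, and setIfAbsent() emulates HSETNX (phpredis exposes the real command as hSetNx()). In Redis the command is atomic, so only one of several concurrent requests with the same key can win the claim.

```php
<?php

// Hypothetical in-memory stand-in for a Redis hash.
final class InMemoryHash
{
    /** @var array<string, string> */
    private array $fields = [];

    // Emulates HSETNX: set the field only if it doesn't exist yet; report whether we set it.
    public function setIfAbsent(string $field, string $value): bool
    {
        if (array_key_exists($field, $this->fields)) {
            return false;
        }
        $this->fields[$field] = $value;
        return true;
    }

    public function set(string $field, string $value): void
    {
        $this->fields[$field] = $value;
    }

    public function get(string $field): string|false
    {
        return $this->fields[$field] ?? false;
    }
}

// Hypothetical handler: claim the key first, then do the work.
function handleRequest(InMemoryHash $requests, array &$transactions, string $key, int $amount): array
{
    // Losing the claim means another request owns this key: return its state, don't charge.
    if (!$requests->setIfAbsent($key, json_encode(['status' => 'processing']))) {
        return json_decode($requests->get($key), true);
    }

    $transaction = ['id' => count($transactions) + 1, 'amount' => $amount];
    $transactions[] = $transaction;

    // Replace the placeholder with the final result.
    $result = ['status' => 'done', 'transaction' => $transaction];
    $requests->set($key, json_encode($result));

    return $result;
}

$requests = new InMemoryHash();
$transactions = [];
$key = '9b57a761-757a-4552-aaa9-0f5590688932';

handleRequest($requests, $transactions, $key, 1003);
$duplicate = handleRequest($requests, $transactions, $key, 1003);

echo count($transactions), PHP_EOL; // 1
echo $duplicate['status'], PHP_EOL; // done
```

A duplicate that arrives mid-processing gets the 'processing' placeholder back instead of a second charge. One caveat: if the worker crashes after the claim, the placeholder is never replaced, so stale claims need an expiry or cleanup step.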