In the last 13 years, I've used many programming languages, frameworks, tools, etc. One thing became clear: relational databases outlive every tech stack.

Let's understand them!

Building a database engine

Even better. What about building a database engine?

In fact, I've already done that. And wrote a book called Building a database engine.

You can already pre-order it if you want to support me 🙏

It's going to be available on the 22nd of April. It discusses topics like:

Storage layer

Insert/Update/Delete/Select

Write Ahead Log (WAL) and fault tolerancy

B-Tree indexes

Hash-based full-text indexes

Page-level caching with LRU

...and more

Check it out and pre-order to get the best price:

The following article comes from the book.

Introduction

What happens if the server crashes while inserting a record into a table? What happens if you run 10 inserts but the 4th one fails?

The database is probably left in an inconsistent state. Or the table file is corrupted. Meaning, only part of the data was written successfully and we left the file in an invalid state.

These are extremely hard problems to solve. I guess database developers spent years on solving these consistency and fault tolerance related problems. They came up with ACID that consist of lots of different techniques.

Our database is not gonna be a very reliable one. Which is okay. It's just a toy project. But we can add a simplified version of WAL.

One of the most important fault tolerance mechanisms is WAL or write-ahead log. This is how it works:

When an operation is executed it is written to a write-only log. This is the WAL.

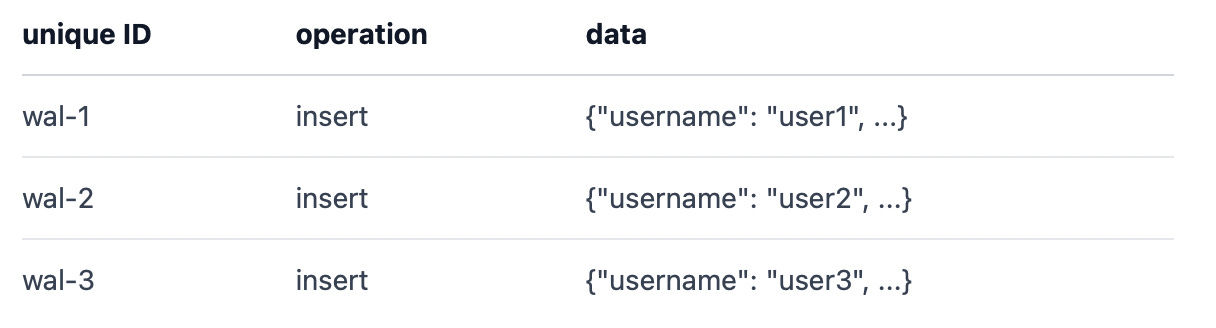

Each log entry has attributes like:

Unique ID

Operation type (insert, delete, update)

Data (the newly inserted row, for example)

When the operation finishes the WAL is written to disk. But not to the table file. There's a separate file for the WAL itself.

Once the data is safe in the log file it is updated in the table files.

Real database engines use WAL together with transaction. So the real flow looks like this:

The transaction starts

Each operation is written to an in-memory WAL

The transaction finishes

The in-memory WAL is writte to a log file

Changes are recorded in the table files

This means that the WAL file contains the most updated state at any moment. If, for some reason, writing to the table file fails, the WAL still contains the lost data.

What happens if the server crashes while writing to the table file? At the next startup, data can be restored from the WAL file.

Write-ahead logs help us develop more fault tolerant systems. It is used in many other cases:

Virtually every database engine

Distributed systems such as Kafka or Kubernetes

Journalig file systems

Message queues

Redis and other cache system

git

and the list goes on

But what happens if something goes wrong while writing to the WAL file? That's behind my comprehension.

Simplified version

We'll implement a simplified version of WAL:

Only inserts will be recorded

There's not going to be an in-memory WAL, only the file

No transactions

We'll have a dedicated WAL to every table. So there are two files per table:

users.bin (the actual table file)

users_wal.bin (the write-ahead log for users)This is much easier than putting everything into the users.bin file.

This how the process works:

Before writing to the table file, the engine will append the new record to the log file

This new log entry has a unique ID

It writes the data to the table file (this is the insert we've already discussed)

Then it "commits" the ID

The last step is very important and it has nothing to do with tranactions.

There are two branches the execution can take:

The

insertis executed successfullyIt fails

Successfull insert

Let's imagine this scenario with pseudo-code:

insert(record) {

uniqueID = wal.append(record)

file.write(record)

wal.commit(uniqueID)

}append writes the record and some metadata to the write-ahead log:

Let's say user3 is the record we want to insert. It is now appended to the log.

Next, the function writes user3 into the table file.

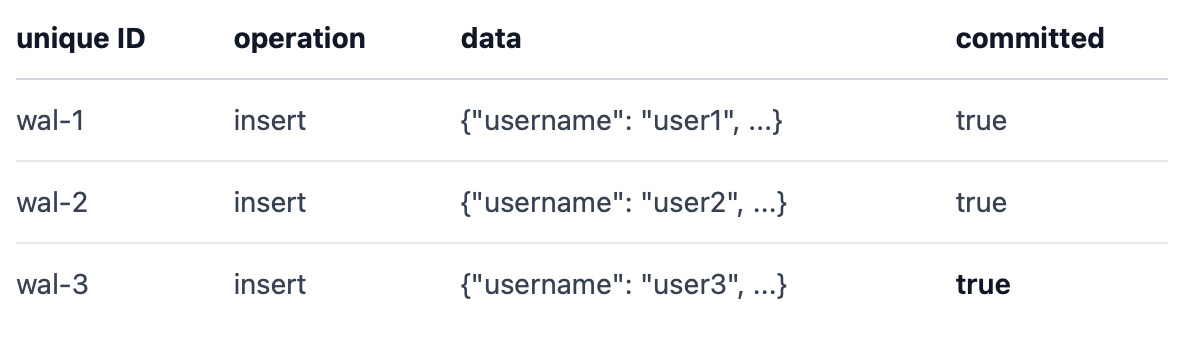

As the last step, we need to "commit" the ID wal-3 because we know it is successfully written to the table file. So at the next startup wal-3 doesn't need to be restored. It is safe.

For now, you can imagine commit as a flag in the log file:

In other words "committed" means "is it written to the table file as well?"

When wal.append first appends the row, committed is false. And then wal.commit sets it to true.

Failure

Now let's see what happens when things don't work out as expected:

insert(record) {

uniqueID = wal.append(record)

err = file.write(record)

if err != nil {

return err

}

wal.commit(uniqueID)

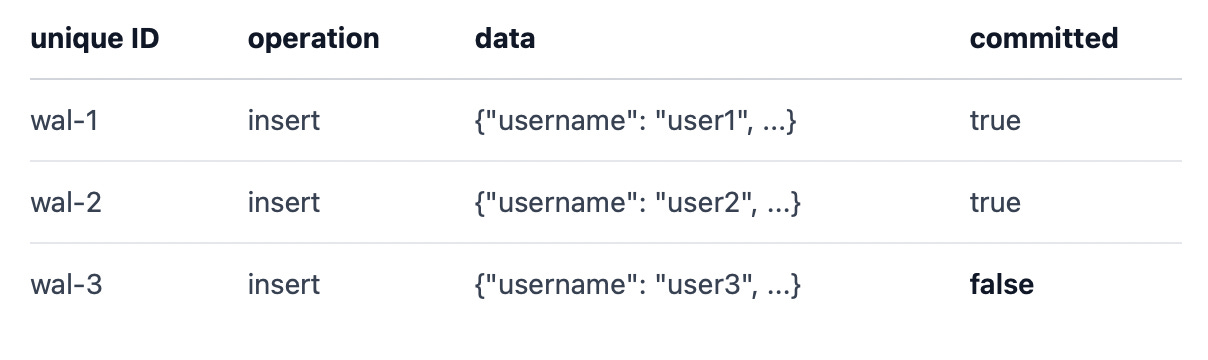

}The record is appended to the log with commited=false

Then file.write fails so the record is not written to disk and the log entry is not committed.

At the next startup, the engine checks uncommitted entries and tries to persist them into the table file:

startup() {

wal.restore()

}

// in the WAL package

restore() {

// returns wal-3

entries := getUncommittedEntries()

for e := range entries {

insert(e.data)

}

}It reads uncommitted entries from the WAL file and inserts them into the table.

This is an oversimplified version of WAL. For example, partial write errors can still happen. Meaning, file.write writes some bytes and then fails. In this case, the table file is left in a bad state.

But this simplified version provides a minimum fault tolerance.

Building a database engine

This whole article comes from my book - Building a database engine

It's going to be available on the 22nd of April. It discusses topics like:

Storage layer

Insert/Update/Delete/Select

Write Ahead Log (WAL) and fault tolerancy

B-Tree indexes

Hash-based full-text indexes

Page-level caching with LRU

...and more

Check it out and pre-order to get the best price:

Append

This is the WAL object:

const (

FilenameTmpl = "%s_wal.bin"

LastIDFilenameTmpl = "%s_wal_last_commit.bin"

)

type (

WAL struct {

file *os.File

lastCommitFile *os.File

}

)It has a file and a lastCommitFile. In the previous chapter, I introduced the committed flag. I lied to you. In the real implementation, we're going to store the last committed ID in a separate file. Later, I'll explain why.

This means each table has three files:

users.bin (the actual table file)

users_wal.bin (the write-ahead log for users)

users_wal_last_commit.bin (the ID of the last commit)Once again, I'm keeping three files to simplifiy our lives. In reality, it should be one users.bin file.

There's an exported (public) Append function:

func (w *WAL) Append(op, table string, data []byte) (*Entry, error) {

id, err := generateID()

if err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

if _, err := w.file.Seek(0, io.SeekEnd); err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

}It takes three arguments:

opis the operation such "insert"tableholds the table name in which the change happened. This is not necessary, because we have a dedicated WAL file for each table but each WAL entry will store the table name as well.datais the actual record that is being inserted. It's the TLV encodied byte representation of the record. Since we only handleinserts a byte array can used to represent the data. Upate or delete would require a more complicated object with the old data and new data, for example.

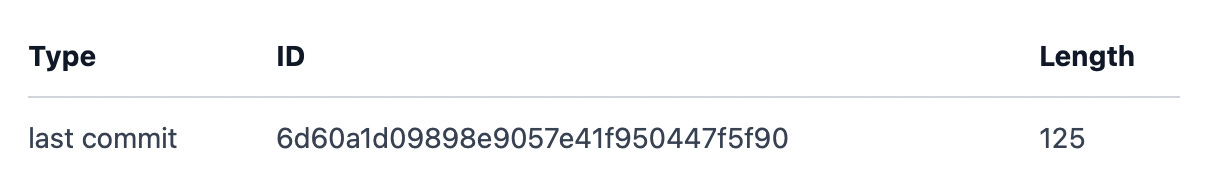

generateID returns a random, unique 32-character long string, such as:

6d60a1d09898e9057e41f950447f5f90This is going to be the unique ID for each log entry.

Next, the function seeks the file to the end. This is important. WAL is an append-only log file so each new entry is appended to the end.

The next step is marshaling the data into a TLV encoded byte sequence. Even though, it's "just" a log, we still use TLV:

marshaler := walencoding.NewWALMarshaler(id, op, table, data)

buf, err := marshaler.MarshalBinary()

if err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}There's a WALMarshaler object that does the job. It works like any marshaler we've seen so far:

type WALMarshaler struct {

ID string

Table string

Op string

Data []byte

}

func (m *WALMarshaler) MarshalBinary() ([]byte, error) {

// ...

}It accepts the to-be-encoded data as its fields and implements the MarshalBinary function.

At this point, you have seen enough marshaling logic, so I skip the boring part. It uses the same objects and functions from earlier chapters. For example:

func (m *WALMarshaler) MarshalBinary() ([]byte, error) {

buf := bytes.Buffer{}

typeMarshaler := encoding.NewValueMarshaler(types.TypeWALItem)

typeBuf, err := typeMarshaler.MarshalBinary()

if err != nil {

return nil, fmt.Errorf("WALMarshaler.MarshalBinary: %w", err)

}

buf.Write(typeBuf)

// ...

return buf.Bytes(), nil

}It encodes the data and returns a TLV byte sequence. If you don’t what TLV is check out my previous post.

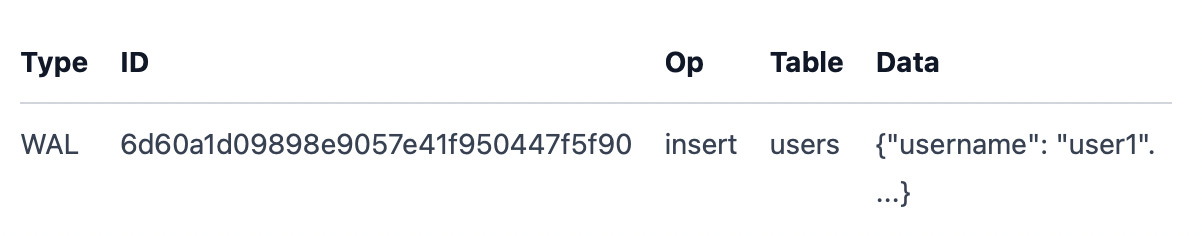

The content this Marshaler produces can be represented as this table.

After data is encoded, it needs to be written into the file:

if err = w.write(buf); err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

return newEntry(id, buf), nilwrite is very simple helper function:

func (w *WAL) write(buf []byte) error {

n, err := w.file.Write(buf)

if err != nil {

return fmt.Errorf("WAL.write: %w", err)

}

if n != len(buf) {

return fmt.Errorf(

"WAL.write: incomplete write. expected: %d, actual: %d",

n,

len(buf)

)

}

return nil

}It writes to file.

And finally the function returns an instance of Entry:

Entry struct {

ID string

Len uint32

} It holds the generated random ID, and the length of the entire log enrtry. Length will be important later.

As you might expected, Append wasn't complicated at all:

func (w *WAL) Append(op, table string, data []byte) (*Entry, error) {

id, err := generateID()

if err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

if _, err := w.file.Seek(0, io.SeekEnd); err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

marshaler := walencoding.NewWALMarshaler(id, op, table, data)

buf, err := marshaler.MarshalBinary()

if err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

if err = w.write(buf); err != nil {

return nil, fmt.Errorf("WAL.Append: %w", err)

}

return newEntry(id, buf), nil

}Append is called in Table.Insert. Before writing to the table file:

func (t *Table) Insert(record map[string]interface{}) (int, error) {

if _, err := t.file.Seek(0, io.SeekEnd); err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

if err := t.validateColumns(record); err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

buf := bytes.Buffer{}

// ...

walEntry, err := t.wal.Append(

walencoding.OpInsert,

t.Name,

buf.Bytes(),

)

if err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

n, err := t.file.Write(buf.Bytes())

if err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

return 1, nil

}It calls Append before writing to the file.

Now everytime an Insert happens a new log entry will be created in <table>_wal.bin:

The WAL file is created or loaded in WAL's constructor. An instance of WAL is created for each table. And of course, Table has a field of type WAL:

type Table struct {

Name string

file *os.File

columnNames []string

columns Columns

reader *platformio.Reader

recordParser *parser.RecordParser

columnDefReader *columnio.ColumnDefinitionReader

wal *wal.WAL

}Commit

Commit takes an Entry (created by Append) encodes it to TLV and writes it to <table>_wal_last_commit.bin:

func (w *WAL) Commit(entry *Entry) error {

marshaler := walencoding.NewLastCommitMarshaler(entry.ID, entry.Len)

data, err := marshaler.MarshalBinary()

if err != nil {

return fmt.Errorf("WAL.Commit: %w", err)

}

err := os.WriteFile(w.lastCommitFile.Name(), data, 0644)

if err != nil {

return fmt.Errorf("WAL.Commit: %w", err)

}

return nil

}It couldn't be simpler than this.

The only unusual thing is this:

if err := os.WriteFile(w.lastCommitFile.Name(), data, 0644); err != nil {

return fmt.Errorf("WAL.Commit: %w", err)

}Instead of:

w.lastCommitFile.write(buf)os.WriteFile will override the file' content. This is exactly what we need. The last commit file always contains one row. The last commit.

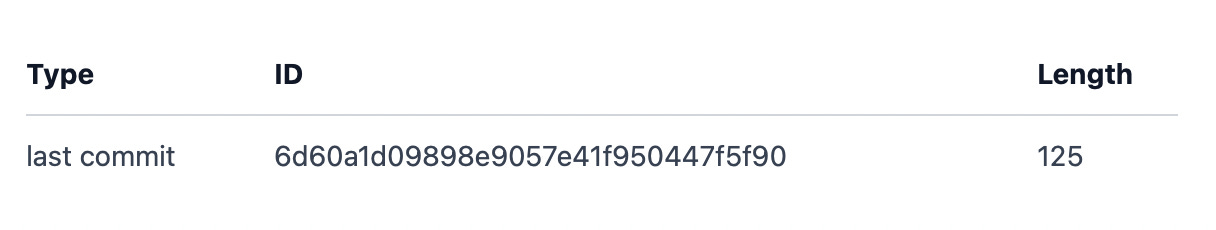

In fact, it contains the last commit ID and the length of the last entry:

There's a dedicated marshaler object for the last commit data:

func (m *LastCommitMarshaler) MarshalBinary() ([]byte, error) {

buf := bytes.Buffer{}

typeMarshaler := encoding.NewValueMarshaler(types.TypeWALLastIDItem)

typeBuf, err := typeMarshaler.MarshalBinary()

if err != nil {

return nil, fmt.Errorf("WAL.Commit: %w", err)

}

buf.Write(typeBuf)

// ...

}It works as every other marshaler in the system.

The last step is actually calling commit in Table.Insert:

func (t *Table) Insert(record map[string]interface{}) (int, error) {

if _, err := t.file.Seek(0, io.SeekEnd); err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

if err := t.validateColumns(record); err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

walEntry, err = t.wal.Append(

walencoding.OpInsert,

t.Name,

buf.Bytes()

)

if err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

n, err := t.file.Write(buf.Bytes())

if err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

if err := t.wal.Commit(walEntry); err != nil {

return 0, fmt.Errorf("Table.Insert: %w", err)

}

return 1, nil

}This is the exact flow we discussed in the introduction:

Appending to the log

Writing into the table file

Commiting

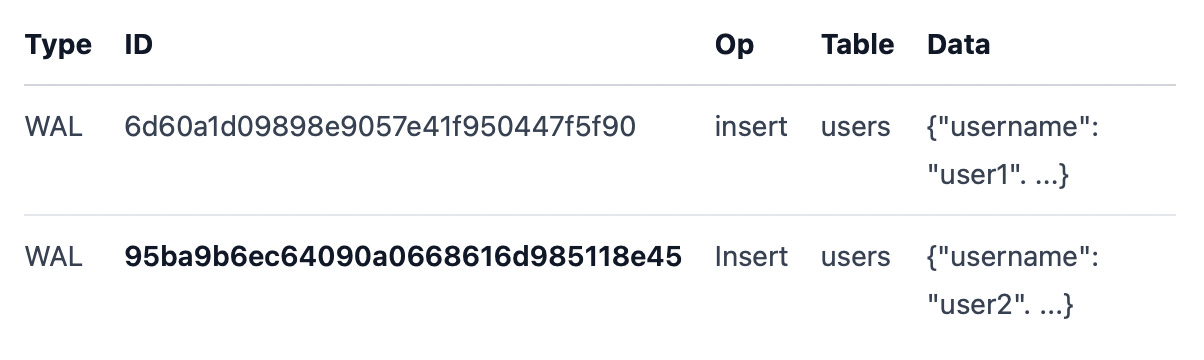

If t.file.Write() fails the given ID is never committed. In the log file we have data like this:

But the last commit file contains a row like this:

The newly inserted 95ba9b6ec64090a0668616d985118e45 entry is not committed. When starting the database server it knows it needs to restore ID 95ba9b6ec64090a0668616d985118e45 and write it to the table file.

In the book, we’re going to implement the entire restore process as well.

Building a database engine

This whole article comes from my book - Building a database engine

It's going to be available on the 22nd of April. It discusses topics like:

Storage layer

Insert/Update/Delete/Select

Write Ahead Log (WAL) and fault tolerancy

B-Tree indexes

Hash-based full-text indexes

Page-level caching with LRU

...and more

Check it out and pre-order to get the best price: